
8 May 1998

High-performance Computing, National Security Applications,

and

Export Control Policy at the Close of the 20th Century


As discussed in Building on the Basics [1,2], there are levels below which a control threshold is not viable. At these levels, the policy seeks to control that which is uncontrollable and becomes ineffective. The 1995 study discussed in some depth the factors that influence controllability and made determinations regarding what performance levels were, and were not, controllable. In this chapter we refine the discussion and elaborate upon some of the ideas presented in the earlier work. We conclude with a discussion of a number of options for policy-makers regarding the lower bound of the range within which viable control thresholds may exist.

Kinds of Controllability

During the 1990s, the definition of controllability has become considerably more complex than it was in previous decades. In particular, industry-wide trends towards parallel processing and systems scalability make it necessary to make a distinction between two related, but not interchangeable concepts:

1. controllability of platforms, and

2. controllability of performance levels.

The first refers to the ability to prevent entities of national security concern from acquiring and using hardware/software platforms for unsanctioned purposes. The latter refers to the ability to prevent such entities from acquiring a particular level of computational capability by any means they can. In the past, the distinction between the two was minimal; today, it is critical. We explain each concept in more depth below.

Controllability of Platforms

The controllability of platforms refers to the extent to which the export and use of computing systems can be adequately monitored and regulated. When systems are highly controllable, the probability that diversion can be prevented is close to 100%. The cost of carrying out a successful diversion and maintaining the system may be so high that, given the low odds of success, an effort is not seriously undertaken. For systems that have low controllability, the premium in extra time and money expended for a diversion may be so small, and the barriers to diversion so low, that successful diversion is likely to occur with some regularity, regardless of what the export control policies are.

While there is no precise, widely agreed upon means by which controllability can be assessed and measured, there is agreement that platform controllability is a function of a number of factors. These include

Size. Small units are more easily transported than large ones. Today's computers fall into a number of categories, shown in Table 4, based on the size and nature of the chassis.

| Chassis | Sample Systems | Commentary |
| --- | --- | --- |
| Proprietary | Most Cray and HP/Convex, CM-5 | Many high-end systems have a unique and distinctive appearance, due to their highly customized chassis. The chassis is designed primarily to meet the technical requirements of the system, and to offer an attractive appearance. The physical size can range from rather large to rather small. The Cray C90 has a footprint of 80 ft² including the I/O subsystem, while a Cray-2 has a footprint of only 16 ft². To be sure, many proprietary systems require extensive cooling and I/O subsystems that occupy additional space. |
| Multiple-rack | IBM RS/6000 SP (SP2), Origin2000 | The basic physical chassis is a rack. Vendor racks differ from one another and are certainly not interchangeable, but they are rectangular: 5-7 feet high, 2-4 feet wide, and 3-4 feet deep. Large configurations consist of multiple, interconnected racks. In the extreme, configurations can be huge. The ASCI Red (Intel) configuration at Sandia National Laboratories has a footprint of 1,000 square feet. |
| Single-rack | PowerChallenge XL, AlphaServer 8400, Ultra Enterprise 6000, IBM R40, HP T-500, T-600 | The physical chassis is a rack, as just described. The system may not scale beyond a single rack. If it does, the multi-rack configurations have substantially different programming paradigms or performance characteristics than the single-rack system. The Silicon Graphics PowerChallenge Array is a good example. The individual PowerChallenge racks are shared-memory systems, while the array offers a distributed-memory paradigm. |
| Deskside | Origin200 dual-tower, PowerChallenge L and Challenge, AlphaServer 4100 and 2100, IBM J40, Ultra Enterprise 3000, 4000 | The desk-side chassis rarely stands taller than three feet. Such systems are highly compact and portable. They typically offer multiprocessing capability, with maximum numbers of processors ranging from two to eight. |
| Desktop | Numerous uniprocessor workstations, some dual-processor workstations | The desktop chassis is the most popular for today's single-user workstations and personal computers. The number of processors is typically one or two. "Tower" cabinets that may sit beside a desk for convenience fall into this category as well. |

Table 4 HPC chassis


Infrastructure requirements. The principal distinction in infrastructure requirements is between systems that are air-cooled and those that require liquid cooling. Air-cooled units are easier to install and maintain. Liquid-cooled units, e.g. the Cray-2, can require extensive cooling systems whose size, power, and maintenance requirements exceed those of the computer itself.

A dominant trend in high-performance computer manufacturing has been the use of CMOS rather than Emitter-Coupled Logic (ECL) components. The latter has been used in the past because it offered higher speeds. However, it also required substantially more power per unit of volume and therefore required liquid cooling systems. The economics of high-performance computing has forced all HPC vendors to offer CMOS systems and reduce the number of models employing the more costly ECL.

Vendor dependence. The more dependent a system is on the vendor for installation, maintenance, and operation, the more controllable the system is. Clearly, periodic visits to an installation provide a vendor with an excellent opportunity to assess not only the location of a system, but also the nature and extent of its use. The more frequent and involved the site visits, the more closely the vendor can monitor the usage of a system. Two extremes illustrate the range of "care and feeding" that systems require. On the one hand, large, customized configurations of pioneering systems such as those developed under the Accelerated Strategic Computing Initiative (ASCI) program require continuous, on-site presence by vendor representatives. Large-configuration but commercially available systems might involve vendor representatives intensively during system installation, acceptance testing, and upgrading. During routine operation, skilled employees of a systems integrator (e.g., Nichols Research, Northrop Grumman) monitor and operate the system. At the other extreme, personal computers sold through mail-order companies usually require no vendor involvement once the package leaves the warehouse. Users are responsible for, and usually capable of, managing the installation, upgrade, operation, and maintenance of such systems. Moreover, an extensive infrastructure of third-party companies providing support services exists throughout the world.

Between these two extremes are a number of degrees of customer dependence on vendors. These can be classified using the categories shown in Table 5, which are ranked from greatest dependence to least:

| Vendor Dependence | Sample Systems | Commentary |
| --- | --- | --- |
| Total | Cray C90 and T90, large configurations of massively parallel platforms such as the SP2. Possibly Cray T3D and T3E, depending on capabilities of user. | The customer is totally dependent on the vendor. The vendor, or a vendor representative, performs all installation, operation, and maintenance tasks on a continuous basis. Users have no direct interaction with the system. Jobs submitted for runs are loaded and executed by vendor representatives. |
| High | Cray J90, HP/Convex Exemplar, IBM's RS/6000 SP (formerly called the SP2), Cray Origin2000. | The vendor installs, maintains, and upgrades the system, but the customer may manage the system during routine operation and perform software upgrades. |
| Moderate | DEC's AlphaServer 8400, SGI Challenge XL, PowerChallenge XL, Sun's Ultra Enterprise, HP's T-series | The vendor typically installs the system and provides supporting services during unusual circumstances, such as system failure or extensive upgrades. The customer may perform routine maintenance and simple CPU and memory upgrades. Third-party companies may provide such services as well as the vendor. |
| Low | AlphaServer 2100, AlphaServer 4100, SGI Challenge L, PowerChallenge L | The vendor may provide advisory services as requested by the customer. Customers with in-house expertise can function well without such services, while customers with little expertise may rely on them more heavily. An infrastructure of large and small companies providing this expertise may exist. |
| None | Many single- and dual-processor workstations and most personal computers and Intel-based servers fall in this category. | The vendor is not usually involved in installing, maintaining, or operating the system. The typical customer does not need such support. Third-party individuals or companies may provide any services necessary. |

Table 5 Categories of dependence on vendors


Installed base. It is probably not possible to determine precisely at what point the number of installations becomes so large that the vendor loses the ability to track the location and use of each unit. Such a point is a function not only of the number of units installed, but also of the nature of the distribution and supporting services networks, and the amount of resources the vendor is willing to bring to bear on the problem. However, it is helpful to classify a given model's installed base in one of several categories. Table 6 provides a possible classification of the installed base, and identifies some representative systems for each category, circa Fourth Quarter 1997.

| Installed Base | Commentary |
| --- | --- |
| 10s | Traditional supercomputers lie in this category. Vendors have a precise understanding of where individual systems are located. |
| 100s | This category often includes high-end systems that have been shipping only a few months, but which may eventually enjoy a substantially larger installed base. This category also includes a number of systems pioneering new markets (e.g. commercial HPC) for their vendors. Vendors usually are able to monitor individual installations with a fair degree of confidence. |
| 1000s | Most high-end servers fall in this category. It is a gray area. Monitoring of individual installations becomes difficult, but may be possible, depending on the nature of the vendor's sales and support infrastructure. When the installed base reaches high 1000s, there is a high probability that there will be leaks in control mechanisms. |
| 10,000s | Most mid-range, desk-side servers fall in this category. Systems may be considered mass-market products. As a practical matter, it is all but impossible to keep close track of each unit. |
| 100,000s | UNIX/RISC workstations and Windows NT servers fall in this category. Systems are increasingly like commodities. |

Table 6 Impact of installed base on controllability (circa 4Q 1997)


Age. Systems based on microprocessor technologies have product cycles that track the microprocessor cycles. At present, vendors are introducing new generations of their microprocessors approximately every 1-2 years. Over the course of each generation, vendors increase the clock speed a number of times. Consequently, the majority of units of a particular model are sold within two years of the product's introduction. Systems like the Cray vector-pipelined systems have product cycles on the order of 3-4 years.

As systems grow older, they are frequently sold to brokers or refurbishers and resold, without the knowledge or involvement of the original vendor. Most users keep their systems running productively for 3-4 years; it is difficult to amortize system costs in less time than this. However, a secondary market for rack-based systems is likely to start developing approximately two years following their first shipments. Desktop and deskside models may be found on secondary markets a year following introduction.

Vendor distribution networks. If systems are sold through a network of value-added resellers (VAR), distributors, OEMs, or other non-direct channels, no single individual or entity may have a complete understanding of the history of a particular system.

Price. Since markets (and size of the installed base) are often strongly correlated to price, systems with lower prices are likely to be less controllable than more expensive systems for at least three reasons. First, the lower the price of a target system, the greater the number of organizations at restricted destinations able to afford the system. The number of attempts to acquire a particular kind of system may increase. Second, vendors are willing to commit more resources towards controlling an expensive system than a cheap system. Profit margins are usually lower on the cheaper systems that occupy more price-competitive market niches. In addition, if a fixed fraction of the system price (say, 5%) were devoted to control measures, substantially more money would be available for this purpose from the sale of a $10 million system than from the sale of a $100,000 system. Third, customers prefer to have vendors install and upgrade expensive systems. The issue is risk management. The systems cost too much to risk an improperly conducted installation.
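The second reason comes down to simple arithmetic. The sketch below is only an illustration: the helper name `control_budget` is hypothetical, and the 5% share is an assumed reading of the fraction given in the text.

```python
def control_budget(system_price, percent=5):
    """Money set aside for control measures when a fixed percentage of
    the sale price is devoted to them (5% is an assumed example value)."""
    return system_price * percent / 100


expensive = control_budget(10_000_000)  # sale of a $10 million system
cheap = control_budget(100_000)         # sale of a $100,000 system

print(f"${expensive:,.0f} versus ${cheap:,.0f} per sale")
```

Under this assumption, the same percentage yields one hundred times more control funding per sale for the expensive system.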

Based on these factors, systems can, at a given instant in time, be classified according to their controllability. Table 7 lists a sample of systems in each of four controllability categories. Costs have been rounded off. The installed base figures apply to most, but not necessarily all models in a given category.

Controllability of Performance Levels

Controllability of platforms has little to do, inherently, with performance. If a particular system has a high price, a small installed base, high dependence on the vendor for operations and maintenance, etc., then the vendor is likely to be able to keep close track of that system, regardless of its performance. For example, the Cray YMP/1, as a platform, is still controllable, even though it has a performance of 500 Mtops, which is lower than the performance of many widely available uni- and dual-processor workstations. Efforts to prevent Cray YMP platforms from being diverted are likely to be successful, even though efforts today to prevent foreign acquisition of Cray YMP equivalent performance are unlikely to be successful.

What determines the controllability of a particular performance level? Principally,

1. the performance of systems available from foreign sources not supporting US export control policies,

2. the performance of uncontrollable platforms, and

3. the scalability of platforms.


| Vendor | Model/Processor | Size | Cooling | Year delivered | Dependence on vendor | Cost of Entry-level System (at introduction) | Installed Base | Distribution Network |
| --- | --- | --- | --- | --- | --- | --- | --- | --- |
| CONTROLLABILITY: VERY HIGH | | | | | | | | |
| Cray | T90 | non-standard chassis | water | 1995 4Q | Total | $2.5 million | typically 10s | Direct |
| Cray | T3D | non-standard chassis | air | 1993 3Q | Total/High | $1 million | typically 10s | Direct |
| Cray | T3E/600 | non-standard chassis | water | 1996 | Total | | typically 10s | Direct |
| CONTROLLABILITY: HIGH | | | | | | | | |
| HP/Convex | Exemplar X-Class | non-standard chassis | air | 1997 1Q | High | $200,000 | typically 100s | Direct |
| Cray | J916 | non-standard chassis | air | 1995 1Q | High | $200,000 | typically 100s | Direct |
| Sun | UltraEnterprise 10000 | rack | air | 1996 | High/Moderate | $500,000 | typically 100s | Direct/VAR |
| SGI | Origin2000 R10000/180 | rack | air | 1996 4Q | High/Moderate | $100,000 | typically 100s | Direct/Dealership/VAR |
| IBM | SP2 Power2 High Node | multiple racks | air | 1996 3Q | High | $150,000 | typically 100s | Direct/VAR |
| CONTROLLABILITY: MODERATE | | | | | | | | |
| DEC | AlphaServer 8400 EV5 | rack | air | 1995 2Q | High/Moderate | $200,000 | typically 1000s | Direct |
| SGI | Power Challenge L R10000/200 | desk-side | air | 1996 1Q | High/Moderate | $100,000 | typically 1000s | Direct/Dealership/VAR |
| SGI | Origin2000 R10000/180 | desk-side | air | 1996 4Q | Low | $30,000 | typically 1000s | Direct/Dealership/VAR |
| Sun | UltraEnterprise 5000 | rack | air | 1996 | High/Moderate | $100,000 | typically 1000s | Direct/Dealership/VAR |
| IBM | SP2 Power2/77 | multiple racks | air | 1995 | High | $100,000 | typically 1000s | Direct/VAR |
| CONTROLLABILITY: LOW | | | | | | | | |
| Sun | UltraEnterprise 3000 | desk-side | air | 1996 | Moderate/Low | $50,000 | typically 10,000s | Direct/Dealership/VAR |
| DEC | AlphaServer 2100 | short rack | air | 1995 2Q | Low | $60,000 | typically 10,000s | Direct/Dealership/VAR |
| DEC | AlphaServer 4100 | desk-side | air | 1996 2Q | Low | $60,000 | typically 10,000s | Direct/Dealership/VAR |
| HP | HP 9000 4x0 | desk-side | air | 1995 | Low | $50,000 | typically 10,000s | Direct/Dealership/VAR |

Table 7 Examples of platform controllability (4Q 1997)

Performance of foreign systems. Systems available from foreign sources not cooperating with US export control policies may also be considered uncontrollable platforms. Foreign availability has traditionally been an important element of the export control debate. Separating out this category of uncontrollable platforms is analytically helpful, since it adds to the set of uncontrollable platforms systems that are uncontrollable not because of their technical or market qualities, but because of their point of origin. Today, the systems developed indigenously in countries outside the United States, Japan, and Western Europe are not commercially competitive with U.S. systems, nor do they have particularly high levels of performance. Table 8 shows some of the leading systems from Tier 3 countries.

| Model | Country | Organization | Year | Description | Peak Mflops | Mtops (est.) | Units installed (est.) |
| --- | --- | --- | --- | --- | --- | --- | --- |
| MVS-100 | Russia | Kvant Scientific Research Institute | 1995 | 32 i860s | 2400 | 1,500 | -20 |
| MP-3 | Russia | VNII Experimental Physics | 1995 | 8 i860s | 600 | 482 | <20 |
| MP-XX | Russia | VNII Experimental Physics | 1997? | 32 Pentium (Pro) | 6400 | 5,917 | pending? |
| Param-9000 | India | Center for the Development of Advanced Computing | 1995 | 64(?) SuperSparc II/75 (200 max) | 4800 (15,000 max) | 3,685 | <30 |
| Param-10000 | India | Center for the Development of Advanced Computing | 1998 | UltraSparc | | | |
| Pace-2 | India | Defense Research and Development Laboratories | | | 2,000 (max) | | <10 |
| Pace+ | India | Defense Research and Development Laboratories | 1998? | | 30,000 (max) | | |
| Dawning-1000 | PRC | National Research Center for Intelligent Computing Systems | 1995 | 32 i860 | 2400 | 1,500 | |
| Galaxy-II | PRC | National Defense University of Science and Technology | 1993 | 4 CPUs | 400 | | <20 |
| Galaxy-III | PRC | National Defense University of Science and Technology | 1997 | 128 Processor (max) | 13000 (max) | | <10 |

Table 8 Selected HPC systems from Tier 3 countries

The table illustrates that while a few countries do have established HPC programs, their indigenous systems are not in widespread use and, for the most part, have performances under 4,000 Mtops, usually far below this level. It is likely that the Russians are able to construct a system employing 32 Pentium Pro/200; it is not clear whether they have in fact done so. The country continues to have difficulty financing development projects.


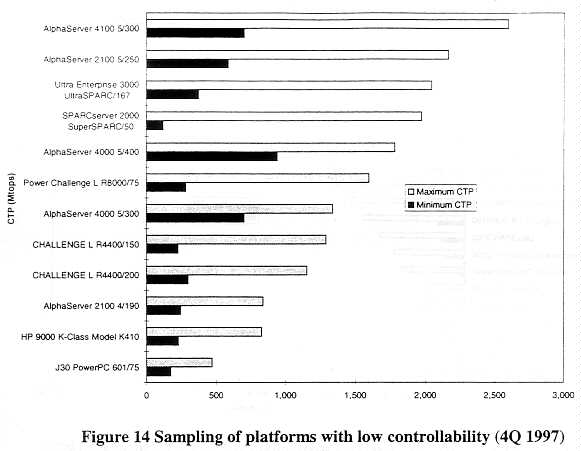

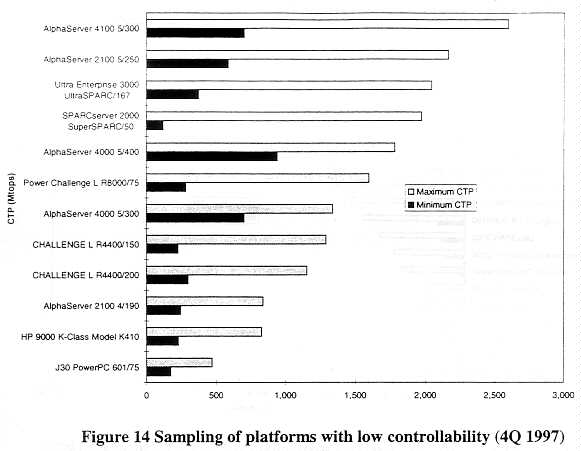

Performance of uncontrollable platforms. The performance level provided by the most powerful uncontrollable system is, clearly, uncontrollable. The fact that there may be controllable platforms with lower performance (e.g., the Cray YMP example) does not negate this reality. Figure 14 and Figure 15 show the performance of a number of systems that have low and moderate controllability, circa 4Q 1997.

A number of the systems in Figure 15, such as the AlphaServer 4100/466, the PowerChallenge L R10000/200, the IBM J50 and various HP K-Class desk-side servers will slip into the Low Controllability category in 1998 on the strength of their installed base. These systems have CTP levels in the 4000-5000 Mtops range.
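The second determinant amounts to taking a maximum over the set of uncontrollable platforms. The sketch below is illustrative only: the `Platform` record and `uncontrollable_level` helper are hypothetical names, the "high" rating given to the Cray YMP/1 is an assumption (the text says only that it is still controllable), and the two Mtops figures are the ones quoted in this chapter.

```python
from dataclasses import dataclass


@dataclass
class Platform:
    name: str
    mtops: float
    controllability: str  # "very high", "high", "moderate", or "low"


def uncontrollable_level(platforms, uncontrollable=("low",)):
    """Highest Mtops among platforms treated as uncontrollable (0 if none)."""
    return max(
        (p.mtops for p in platforms if p.controllability in uncontrollable),
        default=0.0,
    )


platforms = [
    # Controllable as a platform despite modest performance (rating assumed).
    Platform("Cray YMP/1", 500, "high"),
    # Listed under low controllability in Table 7; four-processor figure.
    Platform("DEC AlphaServer 4100", 4033, "low"),
]

floor = uncontrollable_level(platforms)
print(f"Performance below {floor} Mtops cannot be controlled in this sample")
```

The controllable Cray YMP/1 does not lower the result, which mirrors the point that a controllable platform with lower performance does not make that performance level controllable.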


Scalability of Platforms. Scalability of platforms, the third determinant, makes it possible to increase the performance of a system incrementally by adding computational resources, such as CPUs, memory, I/O, and interconnect bandwidth, rather than by acquiring a replacement system. The controllability of performance levels is strongly affected when the range of scalability is large, and users without the assistance of vendor representatives can add computational resources in the field.

To see this, we must draw a distinction between several different kinds of performance.

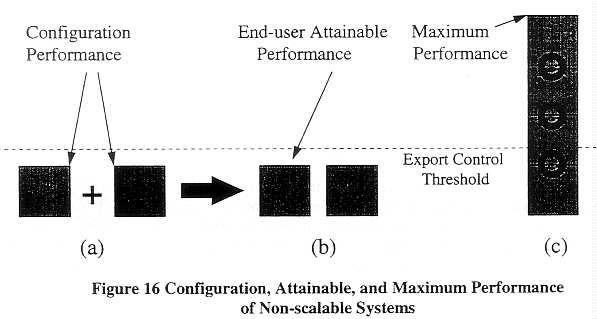

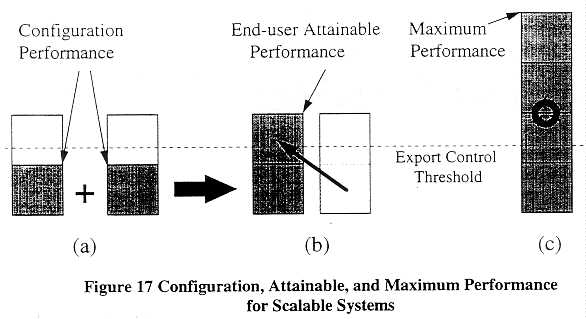

1. Configuration performance. The configuration performance is the CTP of a specific configuration to be shipped to a customer. This is the performance that is currently used to determine if a system requires an individually validated license (IVL). If an IVL is required, the configuration performance is included in the export license application.

2. Maximum performance. The maximum performance is the CTP of the largest configuration of a system that could be constructed given the necessary resources, vendor support, and specialized technologies. All commercial systems today have a maximum number of processors, a limit established by design. For example, a Cray C90 has a maximum configuration of sixteen processors (21,125 Mtops). The SGI Origin2000 has a maximum configuration of 64 processors, with a maximum performance of 23,488 Mtops. (The Cray Origin2000 includes a specialized Metarouter that enables scaling to 128 processors.) The DEC Alpha 4100 can be configured with up to 4 processors (4,033 Mtops).

3. End-user attainable performance. The end-user attainable performance is the performance of the largest configuration of an individual, tightly coupled system an end-user could assemble without vendor support, using only the hardware and software provided with lesser configurations. This definition does not apply to more loosely coupled agglomerations such as clusters. By "lesser configuration" we usually mean a configuration that can be exported under existing export control policies.

The scalability of a system has enormous significance for the relationship of these three quantities. Figure 16 illustrates their relationship when systems are not scalable. Suppose (a) represents two uni-processor configurations of a hypothetical vector-pipelined system that the vendor sells in configurations between one and four processors. Each uni-processor configuration falls below the control threshold, but dual- and four-way configurations lie above the threshold. Upgrading from one to two or four processors requires substantial vendor involvement and specialized technology, represented by the circular arrows in (c). When systems cannot be upgraded in the field, upgrading requires the complete replacement of one configuration by another. The diagram illustrates that if two uni-processors are exported, the result is two uni-processor systems. Without the specialized expertise and technology provided by the vendor, it is not possible to combine two uni-processor systems into a dual-processor system. In this case, the attainable performance is equivalent to the configuration performance.

Figure 17 illustrates the three concepts when systems are easily scalable. Suppose there is a hypothetical rack-based parallel processing system where each rack can have up to 16 processors. Two racks can be integrated into a maximum configuration system (32 processors), but only by the vendor, who must install, say, a proprietary high-speed switch represented by the circular arrows shown in (c). Within a rack, CPU and I/O boards can be easily added or removed without specialized expertise or technology. Further suppose that an eight-processor configuration has a performance below the export control threshold, while a full rack's performance exceeds it.

Under these conditions, the configuration performance and end-user attainable performance are quite different. Two half-full racks may be exported perfectly legitimately under general license (a). Once in place, the CPU, memory, and I/O boards can be moved from one rack to the other as shown in (b) without vendor knowledge or assistance. The resulting configuration would have the same computational capacity (aggregate operations per second and volume of memory) as the two originals, but significantly greater capability as measured in Mtops. Acquiring additional half-full racks would not help the user attain the maximum performance (c), however, since individual racks are not shipped with a high-speed switch.
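The rack example can be sketched in a few lines. This is an illustration under stated assumptions: processor counts stand in for performance, the helper name `attainable_processors` is hypothetical, and the threshold of 12 is an invented value placed between the eight-processor and full-rack configurations.

```python
def attainable_processors(shipped_racks, rack_capacity):
    """Largest single-rack configuration a user could assemble by pooling
    boards from every shipped rack, with no vendor-installed switch."""
    return min(sum(shipped_racks), rack_capacity)


RACK_CAPACITY = 16      # processors per rack, from the example
MAX_CONFIGURATION = 32  # two racks joined by the vendor's switch
THRESHOLD = 12          # hypothetical: somewhere between 8 and 16

shipped = [8, 8]        # two half-full racks, each below the threshold
configuration = max(shipped)
attainable = attainable_processors(shipped, RACK_CAPACITY)

print(configuration, attainable, MAX_CONFIGURATION)  # prints "8 16 32"
```

Passing more half-full racks, for example `[8, 8, 8, 8]`, still returns 16, which reflects the observation that extra racks do not help the user reach the maximum configuration without the switch.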

Scalability and the Export Control Regime

The trend toward scalable architectures causes fundamental problems for the export regime as currently formulated. The regime relies on a single technological feature, configuration performance, to distinguish those systems that can provide foreign entities with a particular level of computational capability from those that cannot. In other words, the control regime tries to restrict the performance an end-user can attain by placing restrictions on configuration performance.

When systems are not scalable, the current regime works well. Configuration performance and end-user attainable performance are identical, and controlling the former results in effective controls over the latter. Under these circumstances, the challenge for policy-makers is to identify which platforms are uncontrollable and set the control threshold at or above their performance.

When systems are readily scalable, the current regime becomes unstable at many control thresholds. For example, if the control threshold is set, as in Figure 17, above the performance of a small-configuration scalable system but below the end-user attainable performance of that same system, then the policy does not prevent foreign entities from acquiring computer power greater than the threshold. The end-user attainable performance is uncontrollable for reasons that have little to do with the controllability of the platforms themselves and everything to do with the scalable nature of the architecture and construction. From a policy perspective the only stable thresholds are those that are below the minimum configuration performance or at or above the attainable performance. Neither is entirely satisfactory.
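The stability condition stated in the preceding paragraph can be written as a one-line predicate. The sketch is illustrative: the function name is hypothetical and the Mtops values are invented for the example, not taken from any system in this chapter.

```python
def threshold_is_stable(threshold, min_config, attainable):
    """True when field upgrades cannot carry a legally exported system
    past the threshold: the threshold lies below the smallest
    configuration, or at or above what an end-user can assemble."""
    return threshold < min_config or threshold >= attainable


MIN_CONFIG = 500    # hypothetical Mtops of the smallest configuration
ATTAINABLE = 8_000  # hypothetical end-user attainable Mtops

for threshold in (300, 2_000, 8_000):
    print(threshold, threshold_is_stable(threshold, MIN_CONFIG, ATTAINABLE))
```

Only the middle value falls in the unstable band between the minimum configuration and the attainable performance. Stability alone does not make a threshold viable, however.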

Control thresholds set below the performance of small-configuration scalable systems are not viable. The nature of most high-performance computing systems manufactured today is that their smallest configurations are one- or two-processor systems. This level of performance, ranging today from roughly 200 to 2000 Mtops, depending on the processor, is filled with uncontrollable platforms. According to Dataquest, a market research firm, nearly 200,000 UNIX-RISC systems were shipped during the first quarter of 1997 [3]. According to International Data Corp. (IDC), over 600,000 UNIX/RISC workstations were shipped in 1997 [4]. Each of these systems is above 200 Mtops, and most are likely to be above 500 Mtops. By the end of 1998, nearly all UNIX-RISC systems shipped will be above 500 Mtops, and dual- or quad-processor systems above 2000 Mtops will be commonplace.

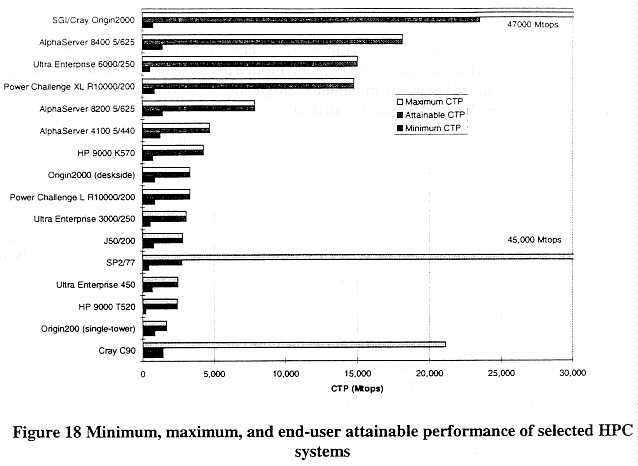

Unfortunately, setting controls marginally higher than the performance of workstations does not prevent foreign entities from attaining significantly higher performance levels under the current control regime. Figure 18 illustrates the end-user attainable performance for several systems introduced within the last two years. Each of these systems has a minimum configuration at the one- or two-processor level that could be shipped under general license.


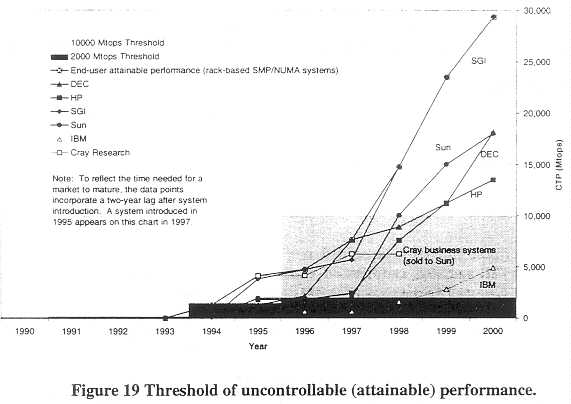

Figure 19 shows how the level of end-user attainable, or uncontrollable performance has risen during the 1990s. The graph incorporates a time lag to allow for markets to mature. A system introduced in 1996 is plotted in 1998. The systems reflected in the graph are mostly rack-based systems with very easy scalability whose minimum configurations lie beneath control thresholds. The rapid rise in performance has been fueled by a combination of increasing processor performance and, to a lesser extent, increasing numbers of processors in a system. The atmospheric performance of the SGI systems reflects the ability to link together multiple SGI racks into a single, integrated, shared memory system using no more than cables.


A fundamental difficulty is that the present control regime uses the configuration performance as a surrogate for end-user attainable performance during licensing considerations. When systems are easily scalable, these two values can be quite different, and the control policy may fail to achieve its objective.

An alternative approach is to take into account the end-user attainable performance of a system during licensing considerations rather than the configuration performance. Under such circumstances, export licensing decisions are made directly on the basis of what the policy seeks to control in the first place: the performance a foreign entity can attain by acquiring U.S. computer technology. No surrogate values are needed.

The following example illustrates one of the principal differences between control regimes based on configuration and end-user attainable performance. Suppose a vendor has two systems it seeks to export. Both systems use the same components, but one system can be scaled easily to eight processors; the other, to 16. The two customers have requested four-processor versions of the two models. Further suppose that the control threshold is set at a performance level equivalent to an eight-processor configuration. Under the current regime, each configuration could be exported under general license because the configuration performance of each lies below the control threshold. However, one customer would be able to scale the system to a performance level well above the threshold without ever having had the export subject to review by licensing authorities.


Although they have the same configuration performance, the two systems would be treated differently under a regime based on end-user attainable performance. Under this regime, the system scalable to eight processors could be exported under general license, but the other system would require an individually validated license and be subject to review by licensing officials.
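The two-system example can be traced in code. This is a sketch only: processor counts stand in for performance, and the names `needs_license` and `systems` are hypothetical. A license is taken to be required only when performance exceeds the threshold, which matches the treatment of the eight-processor system in the example.

```python
def needs_license(performance, threshold):
    """An individually validated license is required above the threshold."""
    return performance > threshold


THRESHOLD = 8  # equivalent to an eight-processor configuration

systems = {
    "scales to 8": {"configured": 4, "attainable": 8},
    "scales to 16": {"configured": 4, "attainable": 16},
}

for name, s in systems.items():
    current = needs_license(s["configured"], THRESHOLD)   # current regime
    proposed = needs_license(s["attainable"], THRESHOLD)  # attainable-based
    print(f"{name}: current regime={current}, attainable regime={proposed}")
```

Both four-processor orders pass under the current regime, while only the system scalable to 16 processors is flagged for review when the decision rests on end-user attainable performance.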

Barriers to scalability

At the heart of the concept of end-user attainable performance is the extent to which end-users can scale smaller systems into larger ones. There are at least three factors that may prevent end users from scaling systems and thereby limit the attainable performance:

Specialized expertise continues to be needed to upgrade some of the massively parallel systems, such as the Cray T3D and T3E and the HP/Convex Exemplar machines, and to upgrade parallel vector-pipelined systems like the Cray C90, T90, or J90. Even the IBM SP2 requires rather substantial expertise when new racks of nodes are added. For SMP system upgrades, specialized expertise is usually not required, although relying on it may be customary.

Limitations in the operating system and interconnect have placed ceilings on scalability. The operating system provides an array of services to applications software including process management, I/O management, and file management. For a system to be fully scalable, these services, as well as the hardware, must be scalable. While considerable progress has been made in scalable operating systems, limitations continue to exist, especially for operating systems supporting shared-memory architectures. Although some systems support shared-memory architecture for up to 64 processors, such processor counts have not been typical in the industry. Systems based on Windows NT still do not scale well beyond eight processors, although this number is increasing.

Specialized hardware is sometimes required when scaling beyond the smaller configurations. For example, up to four Origin2000 racks with a total of 64 processors can be joined together using just cables. Scaling past 64 processors, however, requires a "metarouter," a rack-based unit with a sophisticated proprietary interconnect manufactured only by Cray. The number of systems requiring a metarouter is likely to be quite limited, probably not more than a few hundred.

For SMP-class systems, the most common barrier to scalability is the size of the chassis. Desktop, desk-side, and rack-based models may all incorporate precisely the same component base. Indeed, most computer companies try to leverage the same or similar components throughout their product lines in order to save on development and production costs. However, a desk-side system with room for four or six processors cannot be scaled to 16 or 32 processors.

Intentionally, we limit our definition of end-user attainable performance to integrated, tightly coupled systems provided by HPC vendors. Although it has been clearly demonstrated that competent users can cluster together multiple workstations using readily available interconnects and create systems with substantial computing power [5], determining and applying the end-user attainable performance in such contexts is problematic. First, it would be very difficult to determine the end-user attainable performance in an uncontroversial manner. The performance of clusters is highly variable, especially as they grow in size. What are the limits to scalability? When does performance begin to degrade unacceptably? These are open research questions today. Second, all computing systems sold today can be networked. The end-user attainable performance of all platforms would degenerate into an ill-defined large number that is of little practical use for licensing officials. The consequence of limiting the discussion of end-user attainable performance to individual, tightly coupled systems is that some end users will, in fact, cluster together systems and perform useful work on them. Since clusters are only as controllable as their most controllable elements, many kinds of clusters will be beyond the reach of the export control policy. The policy is likely to "leak." This will be a fact of life in today's world. We discuss "leakage" of the policy in the final chapter.

Establishing a Lower Bound

Any of the factors mentioned above (specialized software, hardware, or expertise) may be taken into account when considering a system's end-user attainable performance. Assuming that the end-user attainable performance of systems can be determined, how should the lower bound of controllability be determined? As before, the lower bound of controllability should be the greater of: (a) the performance of systems widely available from countries not supporting U.S. export control policies, and (b) the end-user attainable performance of uncontrollable platforms.
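The "greater of (a) and (b)" rule reduces to a single maximum. The sketch below is illustrative only; the function name and the two input figures are hypothetical placeholders, not values determined by this study.

```python
def lower_bound_of_controllability(foreign_availability_mtops: float,
                                   uncontrollable_attainable_mtops: float) -> float:
    """Return the greater of (a) foreign availability and
    (b) end-user attainable performance of uncontrollable platforms."""
    return max(foreign_availability_mtops, uncontrollable_attainable_mtops)


# Hypothetical placeholder inputs, in Mtops, chosen only to exercise the rule.
example_foreign = 3000.0
example_uncontrollable = 4300.0

example_bound = lower_bound_of_controllability(example_foreign, example_uncontrollable)
print(f"lower bound: {example_bound} Mtops")
```

Whichever of the two quantities is larger sets the floor; a threshold below it would attempt to control something already available by one route or the other.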

Table 9 offers a classification of computing systems. The end-user attainable performance figures are those of systems introduced in 1997. Each category has different controllability features; controllability decreases as one moves down the table.

| Type | Units installed | Price | End-user attainable performance |
|---|---|---|---|
| Multi-rack HPC systems | 100s | $750K-10s of millions | 20K+ Mtops |
| High-end rack servers | 1,000s | $85K-1 million | 7K-20K Mtops |
| High-end deskside servers | 1,000s | $90K-600K | 7K-11K Mtops |
| Mid-range deskside servers | 10,000s | $30K-250K | 800-4600 Mtops |
| UNIX/RISC workstations | 100,000s | $10K-25K | 300-2000 Mtops |
| Windows NT/Intel servers | 100,000s | $3K-25K | 200-800 Mtops |
| Laptops, uni-processor PCs | 10s of millions | $1K-5K | 200-350 Mtops |

Table 9 Categories of computing platforms (4Q 1997)

The classification presented here, when used in conjunction with the concept of end-user attainable performance, makes it possible to use a performance-based metric to distinguish, more precisely than before, systems that are more controllable from those that are less controllable. For example, a control threshold set at 4,000-5,000 Mtops would permit the export of mid-range systems under general license while subjecting rack-based systems, even small configuration racks, to extra licensing considerations. A threshold set at 2500 Mtops would restrict most RISC-based desk-side systems, while permitting the export of desktop machines under general license.
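To see how a candidate threshold divides the categories, the Table 9 ranges can be compared against it directly. The sketch below transcribes the Mtops ranges from Table 9; the function `classify` and the labels "below", "straddles", and "above" are assumptions made for illustration, and "20K+" is treated as open-ended.

```python
import math

# (category, low Mtops, high Mtops), transcribed from Table 9.
TABLE_9 = [
    ("Multi-rack HPC systems", 20_000, math.inf),
    ("High-end rack servers", 7_000, 20_000),
    ("High-end deskside servers", 7_000, 11_000),
    ("Mid-range deskside servers", 800, 4_600),
    ("UNIX/RISC workstations", 300, 2_000),
    ("Windows NT/Intel servers", 200, 800),
    ("Laptops, uni-processor PCs", 200, 350),
]


def classify(threshold_mtops: float) -> dict:
    """Label each category by where its attainable-performance range
    falls relative to the threshold."""
    result = {}
    for name, low, high in TABLE_9:
        if high <= threshold_mtops:
            result[name] = "below"      # whole category under general license
        elif low > threshold_mtops:
            result[name] = "above"      # whole category subject to review
        else:
            result[name] = "straddles"  # some systems in the category are restricted
    return result


for threshold in (5_000, 2_500):
    print(threshold, classify(threshold))
```

At 5,000 Mtops the mid-range deskside category falls entirely below the threshold and every rack-based category lies above it; at 2,500 Mtops the mid-range deskside range straddles the threshold while workstations and smaller machines remain below it.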

The determination of which category of systems is, in fact, uncontrollable depends on the amount of resources industry and government are willing to bring to bear on monitoring and control measures. Suppose the lower bound of controllability were determined to lie with the deskside machines. Figure 20 shows the anticipated performance of such systems through the turn of the century. The mid-range systems have been plotted one year following their introduction since the installed base grows rapidly, but not instantly. Rack-based systems, defining the line of uncontrollable performance under the current control regime, have been plotted two years after introduction to account for the slower growth of their installed base. The systems contributing to the high-end deskside systems have been plotted either one or two years after introduction, depending on the rate at which their installed base grows.

Projections of when new products will be introduced and at what performance are based on two assumptions: First, the maximum number of processors in a desk-side box will remain constant. Second, the time lag between volume manufacture of a new microprocessor and its appearance in desk-side systems will remain the same as at present. Under these assumptions, the end-user attainable performance is likely to approach 4300 Mtops in 1998, 5300 Mtops in 1999, and 6300 Mtops in the year 2000. We should note that this trend line is consistent with the administration's decision in 1995 to set a control threshold of 2,000 Mtops for Tier 3 military end-users. This was approximately the performance of desk-side mid-range systems in 1995/1996.
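The three projected figures imply a constant yearly increment, which a short calculation makes explicit. The sketch uses only the projections quoted above; the helper `first_year_exceeding` is a hypothetical convenience, and no extrapolation beyond the year 2000 is attempted.

```python
# Projected end-user attainable performance of desk-side systems (Mtops),
# as quoted in the text.
PROJECTED_MTOPS = {1998: 4300, 1999: 5300, 2000: 6300}

years = sorted(PROJECTED_MTOPS)
increments = [PROJECTED_MTOPS[later] - PROJECTED_MTOPS[earlier]
              for earlier, later in zip(years, years[1:])]


def first_year_exceeding(threshold_mtops: int):
    """Return the first projected year whose figure exceeds the threshold,
    or None if no projected year does."""
    for year in years:
        if PROJECTED_MTOPS[year] > threshold_mtops:
            return year
    return None


print("yearly increments:", increments)
print("first year above 5000 Mtops:", first_year_exceeding(5000))
```

A fixed threshold inside this range is overtaken within a year or two, which is the practical meaning of the trend line.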

While this chart reflects approximately linear increases in mid-range system performance through the end of the century, there is a strong possibility that their performance will increase sharply in 2000-2001 (factoring in the one-year time lag). As new generations of processors, principally Intel's Merced, are incorporated into servers, mid-range systems with four or eight processors may have CTP values well above 15,000 Mtops. These systems will constitute the "sweet spot" of the mid-range market and have installed bases on the order of tens or even hundreds of thousands of units.

Unlike in past years, control thresholds that discriminate between categories of computer systems do not discriminate between HPC vendors. In today's HPC market there are no major HPC vendors whose sole business is the highest-end machines. Cray was acquired by Silicon Graphics, Inc.; and Convex, by Hewlett-Packard. Recently, Compaq announced its acquisition of Digital Equipment Corporation. Each of the remaining U.S. HPC vendors now offers a broad product line "from desktops to teraflops" based on the same components. Each vendor has small boxes targeting the very large lower-end HPC markets. Moreover, Figure 20 indicates that the vendor offerings in these markets have quite comparable performances as measured by the CTP, so each is competitive in export markets.

Conclusions

In the preceding discussion we have tried to answer the question, "What is the lower bound of a viable control threshold?" A control threshold as established in the past, which seeks to control the performance available to an end user by controlling the performance of shipped configurations, works well when systems are not easily scaled to higher levels of performance by the end user. A dominant characteristic of most of today's high-performance computing systems is that they are easily scalable, up to a point. A control threshold based solely on the performance of specific configurations being shipped is stable only when set below the minimum configuration performance or above the highest end-user attainable performance of such scalable systems. Neither option is satisfactory, for it demands setting the control threshold at unenforceably low levels or undesirably high levels.
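The stability condition stated above can be written as a simple predicate. This is a sketch only: the function name is hypothetical, and the example figures for a scalable product family are invented placeholders rather than measurements.

```python
def threshold_is_stable(threshold_mtops: float,
                        min_configuration_mtops: float,
                        max_attainable_mtops: float) -> bool:
    """A configuration-based threshold is stable only if it lies below the
    smallest configuration or above the largest attainable configuration
    of a scalable system family."""
    return (threshold_mtops < min_configuration_mtops
            or threshold_mtops > max_attainable_mtops)


# Hypothetical scalable family: smallest configuration 1,000 Mtops,
# largest end-user attainable configuration 8,000 Mtops.
FAMILY_MIN, FAMILY_MAX = 1_000, 8_000

for candidate in (500, 4_000, 10_000):
    print(candidate, threshold_is_stable(candidate, FAMILY_MIN, FAMILY_MAX))
```

Any threshold falling between the two bounds can be circumvented by shipping a small configuration and scaling it after delivery, which is why only the two extremes are stable.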

An alternative control regime would call for export licensing decisions based not on the configuration performance of a system being shipped, but on the end-user attainable performance of that system. The attainable performance is defined as the performance of the largest individual, tightly coupled configuration an end user can assemble without vendor support using only the hardware and software provided with lesser configurations. By "lesser configuration" we usually mean a configuration that can be exported under existing export control policies.


References

[1] Goodman, S. E., P. Wolcott, and G. Burkhart, Building on the Basics: An Examination of High-Performance Computing Export Control Policy in the 1990s, Center for International Security and Arms Control, Stanford University, Stanford, CA, 1995.

[2] Goodman, S., P. Wolcott, and G. Burkhart, Executive Briefing: An Examination of High-performance Computing Export Control Policy in the 1990s, IEEE Computer Society Press, Los Alamitos, 1996.

[3] "NT Has Cut Into UNIX Workstation Mkt Says Dataquest," HPCWire, Jun 20, 1997 (Item 11401).

[4] Gomes, L., "Microsoft, Intel to Launch Plan to Grab Bigger Piece of Market for Workstations," Wall Street Journal, Mar 6, 1998, p. B3.


[5] Sterling, T. et al, Findings of the first NASA workshop on Beowulf-class clustered computing, Oct. 22-23, 1997, Pasadena, CA, Preliminary Report, Nov. 11, 1997, Jet Propulsion Laboratory.